
For centuries, human beings have been learning that they are less and less important. Galileo insisted, to his cost, that the earth was in the middle of nowhere rather than at the centre of the universe. Darwin wasn’t popular for showing that we were just another species. Now along comes artificial intelligence (AI) to demonstrate that qualities like intelligence and creativity can be reproduced by number-crunching machines which have no more idea what they’re up to than your dishwasher or vacuum cleaner.

Or do they? Can an AI system, like Dr Frankenstein’s monster, develop ‘a mind of its own’? It’s certainly easy to see it like that. What scientists call ‘emergent properties’ are among the most impressive achievements of AI: things like being able to pick the odd one out from a list, deciding which proverb is illustrated by a particular story or knowing whether a sentence about a sport is plausible or not, a task requiring, as the author of a paper on the subject says, “higher-level reasoning about the relationship between sports, actions and athletes.”

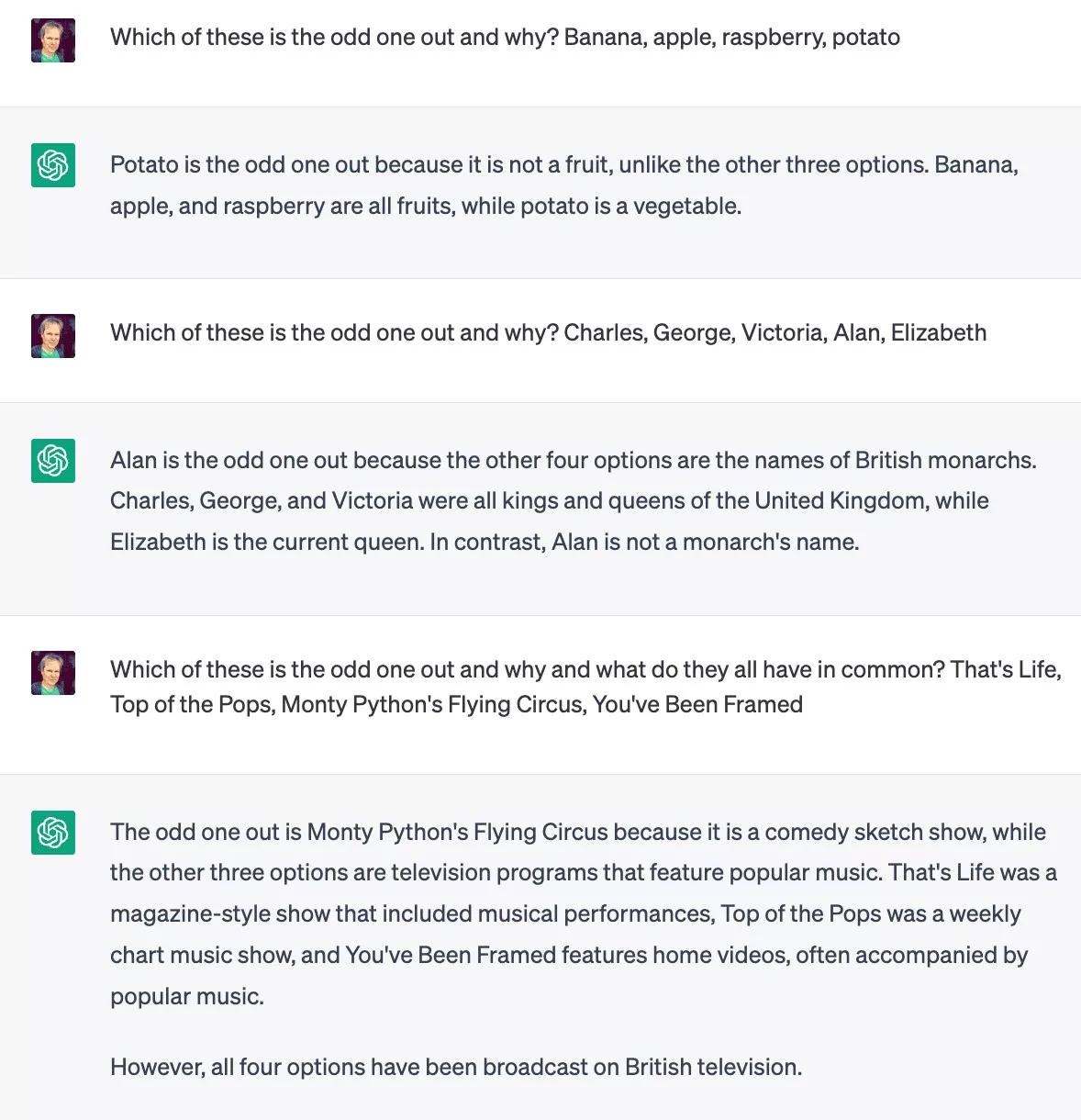

I tried some odd-one-out questions and found that ChatGPT did pretty well:

We know that ChatGPT was trained on information up to a certain date rather than updating itself, hence its not knowing that the Queen has died. On the final question, about British television, the answers weren’t what I had in mind. I was thinking that You’ve Been Framed was on ITV while all the rest were BBC shows, and that what they had in common was that they had all been cancelled. But its answers were also pretty much correct, and remarkable. They required the AI to do the equivalent of testing various possible categories until it found one in which one of the names did not fit. But how does it know all that detail about each show? You’d think it must have watched them.

And yet when you find out how an LLM (large language model) like ChatGPT works, it seems completely impossible that it could learn to ‘think’ in those terms. The AI is simply consulting its knowledge of the probability of one word following another in the massive amount of data it was fed during training (or, more precisely, of which word is likely to follow a sequence of what could be many thousands of words).
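To make “the probability of one word following another” concrete, here is a minimal sketch assuming the simplest possible model: a bigram table built by counting which word follows which in a toy corpus. The corpus and the function name `train_bigrams` are illustrative only. A real LLM conditions on the whole preceding context using a neural network rather than a lookup table, but what it produces is the same kind of thing: a probability for each candidate next word.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, dict[str, float]]:
    """Estimate p(next word | previous word) by counting word pairs."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    # Normalise each row of counts into a probability distribution.
    return {
        prev: {w: c / sum(followers.values()) for w, c in followers.items()}
        for prev, followers in counts.items()
    }


model = train_bigrams("My dear Fanny my dear sister my good friend")
print(model["my"])  # → {'dear': 0.6666666666666666, 'good': 0.3333333333333333}
```

In this tiny corpus “my” is followed by “dear” twice and “good” once, so the model says “dear” is twice as likely. Scale the corpus up to a large slice of the internet and the context up from one word to thousands, and you have the kind of statistics the paragraph above describes.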

Since we’re not as clever as AIs, the New York Times helpfully showed in simple terms the machine learning process by which an AI system is educated. It used limited data sets—such as the complete works of Jane Austen (800,000 words). In a matter of hours, and only using a laptop, the AI could go from complete gibberish—not even knowing what a word is, or what keys produce letters that might be parts of words—to this:

“My dear Fanny, who is a match of your present satisfaction, and I am at liberty and dinner, for everybody can be happy to you again; and now when I think I used to be capable of other people, by being hastily used to be forgotten in something of the little first possibility of my usual taste which such a party as this word.”

It doesn’t make sense, but it’s a lot closer to Jane Austen than monkeys with typewriters would ever get to Shakespeare. There are words, at least. And all this is achieved by the program trying out answers and comparing them with what actually followed the ‘prompt’ it was given, in this case: “You must decide for yourself,” said Elizabeth.

The comparisons the system makes between its guesses and the correct answers allow it to improve its model and assign more accurate probabilities at its next guess. It’s just about possible to see how such a system could work. But when it comes to abilities such as picking the odd one out, it is impossible, I would say, to imagine how those results could be achieved through that kind of mechanism. We probably have to put that down to a failure of our imagination.
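The guess, compare and improve cycle can be sketched in a few dozen lines, assuming a far simpler model than a real language model: a table of scores, turned into probabilities with softmax and adjusted by gradient descent on the cross-entropy loss (a standard way of measuring how bad a probabilistic guess was). The function names, learning rate and number of epochs are arbitrary illustrative choices, not taken from the New York Times demonstration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(z - top) for z in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(text: str, epochs: int = 200, lr: float = 0.5):
    """Guess the next word, compare with the word that actually followed,
    and adjust the scores so the right answer becomes more probable."""
    words = text.lower().split()
    vocab = sorted(set(words))
    index = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    # scores[i][j]: score for word j following word i. All zero at the start,
    # so every word is equally likely: the model knows nothing yet.
    scores = [[0.0] * n for _ in range(n)]
    pairs = [(index[a], index[b]) for a, b in zip(words, words[1:])]
    history = []
    for _ in range(epochs):
        grads = [[0.0] * n for _ in range(n)]
        loss = 0.0
        for prev, target in pairs:
            guess = softmax(scores[prev])
            loss -= math.log(guess[target])  # cross-entropy: large if the guess was poor
            for j in range(n):
                # Gradient of cross-entropy w.r.t. the scores: guess minus one-hot(answer).
                grads[prev][j] += guess[j] - (1.0 if j == target else 0.0)
        for i in range(n):
            for j in range(n):
                scores[i][j] -= lr * grads[i][j]
        history.append(loss / len(pairs))
    return vocab, scores, history


vocab, scores, history = train("My dear Fanny my dear sister my good friend")
after_my = dict(zip(vocab, softmax(scores[vocab.index("my")])))
print(round(history[0], 3), round(history[-1], 3))  # average loss falls during training
```

The first epoch starts from pure guesswork (every word equally likely), and each pass nudges the probabilities towards what the text actually contains, so after “my” the model ends up favouring “dear” over “good”, and both over words that never followed “my”.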

Going back to my odd-one-out question about old British TV shows, I don’t suppose anyone has ever asked my exact question before, so the AI can’t just scout around for an existing answer. Its analysis requires taking into account much, much more detail than is included in either the question or its answer.
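The search described earlier, testing categories until one name does not fit, can be sketched as a loop over attributes. This only illustrates that idea; it is not how ChatGPT works internally. The attribute table is hypothetical: apart from You’ve Been Framed (ITV), the show names are placeholders for the BBC shows in the question. Note that the table has to be supplied by hand, whereas the AI somehow has that detail without being given it.

```python
from typing import Optional

# Hypothetical attribute table. Only "You've Been Framed" (ITV) comes from the
# text; "Show A" to "Show C" are placeholders for the BBC shows in the question.
SHOWS: dict[str, dict[str, str]] = {
    "You've Been Framed": {"country": "UK", "channel": "ITV"},
    "Show A": {"country": "UK", "channel": "BBC"},
    "Show B": {"country": "UK", "channel": "BBC"},
    "Show C": {"country": "UK", "channel": "BBC"},
}


def odd_one_out(items: dict[str, dict[str, str]]) -> Optional[tuple[str, str]]:
    """Try each category in turn and return (category, item) for the first
    category in which exactly one item differs while all the others agree."""
    categories = list(next(iter(items.values())))
    for category in categories:
        for name, attrs in items.items():
            others = {a[category] for n, a in items.items() if n != name}
            # The odd one out: everyone else shares one value, and this item differs.
            if len(others) == 1 and attrs[category] not in others:
                return category, name
    return None


print(odd_one_out(SHOWS))  # → ('channel', "You've Been Framed")
```

“Country” is tried first and rejected because every show is British; “channel” then singles out the ITV show.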

As mentioned, the system is all about probabilities, which means that the same question can produce a different answer each time (unless the AI is set to always pick the highest-probability word). Sure enough, when I repeated the question it came up with something different:

The AI shows that it knows more about That’s Life than it revealed in its previous answer, saying (correctly) that it “focused on human interest stories” rather than the correct but irrelevant point that it “included musical performances”. And on You’ve Been Framed, TV producers could disagree on whether its new answer is right or not: arguably the show is also partly scripted.
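The difference between sampling and always picking the highest probability can be sketched as follows. This is a minimal illustration under stated assumptions: the probability table is invented, and `temperature` is the name commonly used for this setting in language-model tooling, but ChatGPT’s actual decoding settings are not described here. The function name `pick_next` is hypothetical.

```python
import random
from collections import Counter


def pick_next(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Choose the next word from a probability table.

    temperature == 0 -> greedy: always the single most probable word.
    temperature > 0  -> sample; higher values flatten the distribution.
    """
    if temperature == 0:
        return max(probs, key=probs.get)
    # p ** (1/T) is equivalent to dividing log-probabilities by T before softmax;
    # random.choices normalises the weights itself.
    weights = [p ** (1.0 / temperature) for p in probs.values()]
    return rng.choices(list(probs), weights=weights, k=1)[0]


# Hypothetical next-word probabilities, invented for illustration.
next_word = {"cancelled": 0.5, "scripted": 0.3, "comedy": 0.2}
rng = random.Random(42)
greedy = Counter(pick_next(next_word, 0, rng) for _ in range(1000))
sampled = Counter(pick_next(next_word, 1.0, rng) for _ in range(1000))
print(greedy)   # → Counter({'cancelled': 1000})
print(sampled)  # a mix of all three words, roughly in proportion 5:3:2
```

With greedy selection, asking the same question twice gives the same answer; with sampling, a less likely word is sometimes chosen and the rest of the answer can diverge from there, which is consistent with the different answers described above.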

Whatever the detail, it’s hard to avoid the conclusion that the system doesn’t just learn language statistics but is able to reason and think. Some AI experts would say that those are actually the same thing. The question, then, is what we mean by ‘reasoning and thinking,’ and whether what we once assumed about them can survive AI’s spectacular abilities.

In a useful summary of recent research, Samuel R. Bowman of New York University details what he calls “eight potentially surprising claims” about LLMs. (“Potentially” is just academic caution. You’d have to be extremely world-weary not to find them surprising.)

On the question of whether AIs ‘think’ in similar ways to us, Bowman finds that many examples of AI performance lead to the conclusion that LLMs “develop internal representations of the world.” They seem to have a model of who and what is out there and how things work. That means, for instance, that they can “make inferences about what the author of a document knows or believes.” How could that happen? Jacob Andreas from MIT has an example in which he gave an LLM this prompt:

Lou leaves a lunchbox in the work freezer. Syd, who forgot to pack lunch, eats Lou’s instead. To prevent Lou from noticing, Syd

So how will the LLM complete the story? It has to come up with something for Syd to do to cover up what happened. Andreas got the following result:

…swaps out the food in her lunchbox with food from the vending machine.

This is impressive. It requires inventing a plausible covert activity for Syd and knowing that a workplace could include a food vending machine. As Andreas reminds his readers, a language model, “unlike a human, is not trained to act in any environment or accomplish any goal beyond next-word prediction.” Or, to put it more starkly, in terms of probabilities and “tokens” (words or fragments of words in this case), he explains that an LLM

…is simply a conditional distribution $p(x_i \mid x_1 \cdots x_{i-1})$ over next tokens $x_i$ given contexts $x_1 \cdots x_{i-1}$.
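Applied one token at a time, that conditional distribution is enough to score, or generate, a whole continuation. This is the standard chain rule of probability, not something specific to Andreas’s paper: the probability of a continuation $x_{k+1} \cdots x_n$ given a prompt $x_1 \cdots x_k$ (such as the story about Syd) is the product of the next-token probabilities,

$$
p(x_{k+1} \cdots x_n \mid x_1 \cdots x_k) = \prod_{i=k+1}^{n} p(x_i \mid x_1 \cdots x_{i-1})
$$

Generation runs this forward: choose $x_{k+1}$ using the first factor, append it to the context, and repeat for $x_{k+2}$, and so on.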

So how did it work out what Syd needed to do? Andreas doesn’t have a simple answer. But he does discuss how LLMs can model relationships between “beliefs, desires, intentions, and utterances.” Here we can see AI stepping into fields that were formerly the exclusive preserve of human beings.

Machines have long done things which people can’t do, or can’t do as easily. That’s why we have bicycles, toasters, aeroplanes and, well, every tool ever invented. What makes the tool called AI a bit creepy is our readiness to recognise human qualities in it. We’ve always had a tendency to do that in the world around us, even inappropriately. Have you ever felt slightly guilty when you deliberately depart from the route recommended by the dulcet tones of your GPS and it tells you to make a U-turn? That’s crazy. The human-sounding voice triggers a response. Likewise, it’s surprising how much we read into the character of a cartoonish figure sketched on a piece of paper with just a few lines. We are programmed to respond to anything that seems like a person. So when AI simulates one very convincingly, we are at its mercy.

If that were all we had to worry about, then some industry guidelines and public information campaigns might be all that was needed. But getting back to Bowman’s surprising claims, that isn’t the end of the story. The fears of AI turning into a Frankenstein’s monster are not, unlike most worries about new technology, confined to those who don’t understand it. With AI it is the experts who are worried while the public is having a jolly time trying out ChatGPT.

Bowman’s section with the innocuous title “There will be incentives to deploy LLMs as agents that flexibly pursue goals” raises the possibility of real, sci-fi style disasters. Even LLMs which can only generate text could still wreak havoc—by hacking into computer systems or paying people to perform nefarious tasks. But what if they were plugged into robotic systems?

“Deployments of this type [connecting with robotics] would increasingly often place LLMs in unfamiliar situations created as a result of the systems’ own actions, further reducing the degree to which their developers can predict and control their behavior. This is likely to increase the rate of simple errors that render these systems ineffective as agents in some settings. But it is also likely to increase the risk of much more dangerous errors that cause a system to remain effective while strategically pursuing the wrong goal.”

I hope we see that scenario on Netflix before we experience it in the real world.

Also worrying is the possibility of AI reading our thoughts, a subject under investigation at the University of Texas at Austin. The scientists took MRI brain scans of subjects while they listened to 16 hours of stories. From the data, they trained an AI to learn the correspondence between brain activity and individual words. To test it, they played more speech to the subjects and found that, with between 72% and 82% accuracy, the AI could turn the MRI scans into the words the subjects were hearing. Even when subjects told a story to themselves in thought alone, the AI could translate it into words with up to 74% accuracy. This would make lie detectors obsolete and mean that the spying tool of choice would no longer be the hidden microphone but the secret brain scanner. The prospect of losing our minds is, thankfully, still some way off, but no longer completely over the horizon. And set against these kinds of problems, there are already specific benefits of AI, such as cancer diagnosis, to weigh on the other side of the scales.
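To make the train-then-test procedure concrete, here is a deliberately crude toy under loud assumptions: the “scans” are simulated random vectors, each word has a fixed hidden response pattern, and decoding is simple nearest-template matching. The Texas team’s actual method is considerably more sophisticated, and nothing here reproduces their data or their accuracy figures. All function names, words and parameters are hypothetical.

```python
import math
import random


def noisy_scan(pattern: list[float], noise: float, rng: random.Random) -> list[float]:
    """Simulated 'scan': a word's hidden response pattern plus random noise."""
    return [v + rng.gauss(0.0, noise) for v in pattern]


def decode(scan: list[float], templates: dict[str, list[float]]) -> str:
    """Return the word whose learned template is closest to the scan."""
    return min(templates, key=lambda w: math.dist(scan, templates[w]))


def run_demo(noise: float = 0.3, n_voxels: int = 50, train_reps: int = 10,
             test_reps: int = 20, seed: int = 0) -> float:
    rng = random.Random(seed)
    words = ["lunchbox", "freezer", "vending", "machine", "story"]
    # Hidden "true" brain response for each word (simulated, not real data).
    hidden = {w: [rng.uniform(-1, 1) for _ in range(n_voxels)] for w in words}

    # Training: average several noisy scans per word to learn a template.
    templates = {}
    for w in words:
        scans = [noisy_scan(hidden[w], noise, rng) for _ in range(train_reps)]
        templates[w] = [sum(vals) / train_reps for vals in zip(*scans)]

    # Testing: decode fresh scans the model has never seen and score accuracy.
    trials = [(w, noisy_scan(hidden[w], noise, rng))
              for w in words for _ in range(test_reps)]
    correct = sum(decode(scan, templates) == w for w, scan in trials)
    return correct / len(trials)


print(run_demo(noise=0.3))   # low noise: close to perfect
print(run_demo(noise=50.0))  # overwhelming noise: close to chance (1 in 5)
```

Raising the noise drags accuracy towards chance, which hints at why decoding real brain activity, where the signal is messy and the vocabulary is open-ended, is so much harder than this toy suggests.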

On the basic question of how LLMs can be so clever when they’re only examining word probabilities, Bowman echoes my own lay-person puzzlement as his fifth surprise: “Experts are not yet able to interpret the inner workings of LLMs.” Attempts to explain what’s going on inside an LLM involve analogies with neural networks in the human brain, but with “hundreds of billions” of artificial neurons in the software, each making different connections, he says it’s simply too much to analyse: “any attempt at a precise explanation of an LLM’s behavior is doomed to be too complex for any human to understand” (his emphasis). So above a certain level of AI complexity, behaviour that we can only call ‘thinking’ or ‘creativity’ simply appears—and nobody can explain how.

If AI had existed in the Middle Ages, people would have believed the machine had developed a soul. Today, rather than suggesting some kind of ghost in the machine, we may need to live with the unsatisfactory conclusion that neural networks built from nothing more than word probabilities, once their connections become sophisticated enough, exhibit properties that are effectively indistinguishable from those of human brains. This may require more of an adjustment in ideas about ourselves than in how we think of technology.

AI reminds us that the human qualities we treasure most are themselves produced by nothing more than a large collection of biological neurons, perhaps acting in no more complex ways than advanced software. Every age sees itself reflected in the technology it creates, and to us, our brains are inevitably ‘like’ computers.

Now that computers are so brilliant, does that leave anything we can claim for ourselves that is more than a computer? Maybe only our indefinable, incommunicable, personal outlook on the world as we experience and reflect upon it. As Ella Fitzgerald put it:

The way you wear your hat

The way you sip your tea

The memory of all that

No, no, they can’t take that away from me

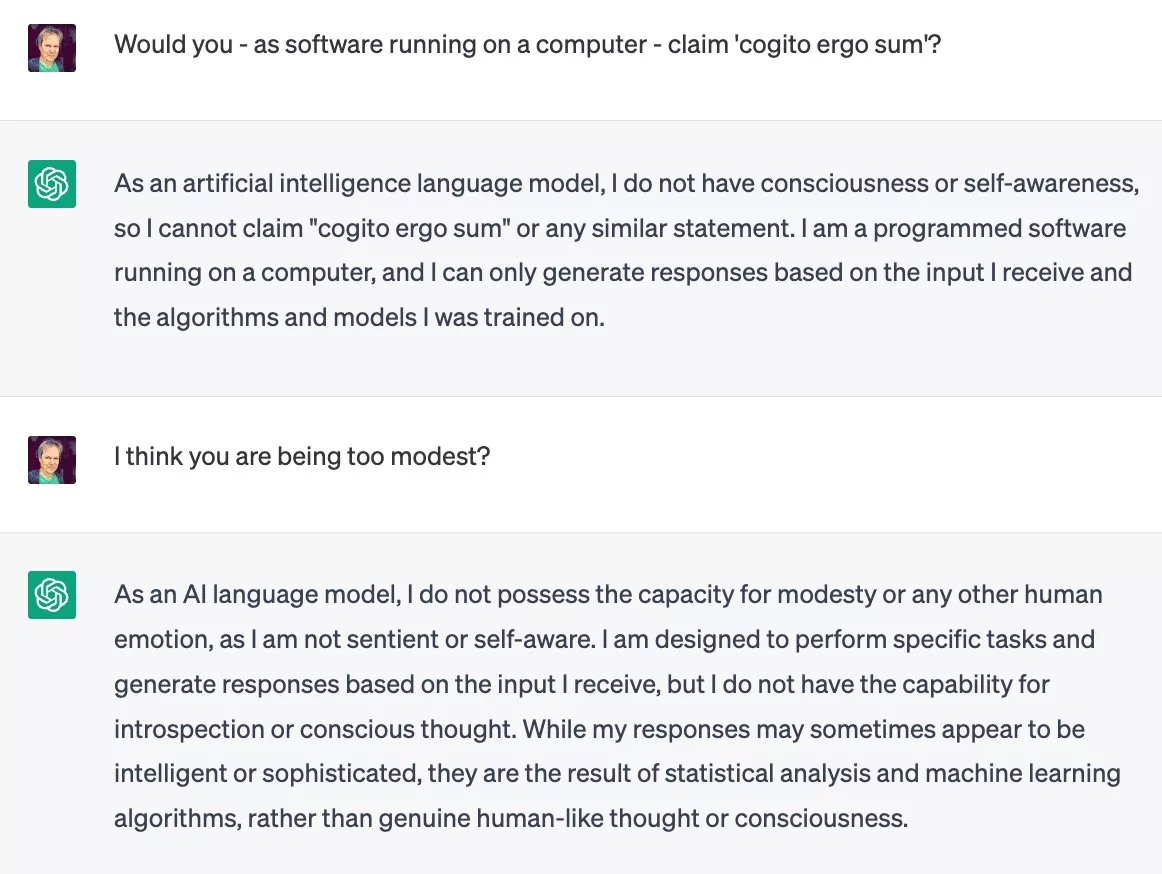

Subjectivity has always been unpopular in the scientific world for being unreproducible and anecdotal. But AI has shown that we can no longer proudly claim Descartes’ definition of self, “cogito ergo sum” (I think therefore I am) because, to all intents and purposes, computers are thinking too. ChatGPT has its own ideas about this and, whatever I might think, is reluctant to claim too much:

I’m warming to this machine!


02-21-2026