
This post is a guest contribution by George Siosi Samuels, managing director at Faiā. See how Faiā is committed to staying at the forefront of technological advancements here.

TL;DR: I’ve been tracking Salesforce’s Agentforce 360, and what’s interesting is how it reframes “suite compute.” Instead of a bundle of features, the suite starts to feel like a living mesh of agents that sense, plan, and act across the stack. Maybe it’s less about a single breakthrough and more about composability, trust, and governance becoming the real leverage. If enterprises can wire those guardrails, the rest could follow.

- Salesforce’s agentic revolution

- From chatbots to enterprise brains

- AI Agents transforming compliance

- Agents at the core of workflows

- The next phase for enterprise agents

Why Agentforce 360 matters in the AI-era of enterprise suites

Recently, I’ve been thinking about the long-standing tension in big orgs: standardize to scale vs differentiate to win. Suites gave coherence but calcified. Point tools drove novelty, but fractured. Agentic artificial intelligence (AI) might be the “and/both” here—an adaptive layer that coordinates across data, workflows, and user interface (UI) without forcing a new monoculture.

Agentforce 360 reads like Salesforce’s bet on that: an agentic layer woven through the suite so intelligence isn’t an add-on, it’s ambient. Not fully settled yet, but you can see the contours. In practical terms, Salesforce is positioning agents that plug into service, sales, commerce, IT, analytics, and more, with multi‑model orchestration that makes large language models (LLMs) swappable and combinable via explicit contracts. A Bring Your Own LLM stance sits alongside Salesforce‑managed models to balance trust, latency, and specialization. Governance and observability are treated as first‑class concerns in Agentforce 3 to keep agent sprawl legible, and Slack is cast as an “agentic OS,” turning conversational UI into the access layer to this mesh.

In short, it’s less “bolt an LLM onto CRM” and more “turn the suite into an agentic fabric.” Still processing, but the shift feels material.

Dissecting the architecture: embedding rather than attaching

What I keep noticing: shallow AI layers (a chatbot here, a predictive overlay there) rarely change the system. They decorate. Embedding agents into the core is different, more like rewiring where decisions happen.

Agentforce leans into Data Cloud, platform services, and MuleSoft so agents can reason with context and actually take action. The Atlas Reasoning Engine handles decomposition, chaining, and decision logic, which means agents don’t just answer; they plan. Interfaces are explicit, with input and output contracts that make models swappable parts in a larger graph.
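One way to picture "explicit contracts" is a small Python sketch. This is only an illustration under my own assumptions: the class and function names below (`AgentRequest`, `AgentResponse`, `ManagedModel`, `BringYourOwnModel`, `run_step`) are hypothetical and are not Salesforce or Agentforce APIs. The point is that the orchestrator depends on the contract, so the model behind it can be swapped.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class AgentRequest:
    """Input contract: what every model behind the agent receives."""
    task: str
    context: str


@dataclass(frozen=True)
class AgentResponse:
    """Output contract: what every model must return."""
    answer: str
    model_name: str


class Model(Protocol):
    """Any object with this method can be plugged into the graph."""

    def complete(self, request: AgentRequest) -> AgentResponse: ...


class ManagedModel:
    """Hypothetical stand-in for a platform-managed model."""

    def complete(self, request: AgentRequest) -> AgentResponse:
        return AgentResponse(answer=f"[managed] {request.task}", model_name="managed")


class BringYourOwnModel:
    """Hypothetical stand-in for a customer-supplied model."""

    def complete(self, request: AgentRequest) -> AgentResponse:
        return AgentResponse(answer=f"[byo] {request.task}", model_name="byo")


def run_step(model: Model, request: AgentRequest) -> AgentResponse:
    """The orchestrator checks the contract and knows nothing about the vendor."""
    response = model.complete(request)
    if not response.answer:
        raise ValueError("model violated the output contract: empty answer")
    return response


request = AgentRequest(task="summarize the open case", context="customer reported a login failure")
for model in (ManagedModel(), BringYourOwnModel()):
    print(run_step(model, request).model_name)
```

Swapping a model here means passing a different object to `run_step`; nothing else in the graph has to change, which is the property the contracts are meant to buy.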

Retrieval‑augmented generation grounds outputs in enterprise data to reduce drift and hallucinations. Meanwhile, built‑in tracing and command centers aim to give operators real visibility into flows and failures.
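To make the grounding idea concrete, here is a toy Python sketch of retrieval feeding a prompt. Everything in it is an assumption for illustration: the `DOCUMENTS` store, the word-overlap scoring (a stand-in for real vector search), and the prompt wording are mine, not how Agentforce or Data Cloud implement retrieval.

```python
import re

# Hypothetical in-memory "enterprise data"; a real system would query a data store.
DOCUMENTS = {
    "refund-policy": "Refunds are issued within 14 days of a return request.",
    "sla": "Priority support tickets receive a first response within 4 hours.",
    "onboarding": "New accounts are provisioned after identity verification.",
}


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list:
    """Rank documents by word overlap with the query and return the top k."""
    query_tokens = tokenize(query)
    ranked = sorted(
        DOCUMENTS.items(),
        key=lambda item: len(query_tokens & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_grounded_prompt(query: str) -> str:
    """Put the retrieved passages in front of the question, with source ids."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query))
    return (
        "Answer using only the sources below and cite the source id.\n"
        f"{sources}\n"
        f"Question: {query}"
    )


print(build_grounded_prompt("How fast is the first response for support tickets?"))
```

The model only sees passages pulled from the organization's own records, and the source id travels with the text, which is what makes an answer checkable afterwards.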

Maybe that’s the quiet move: treat LLMs as components inside governed orchestration, not endpoints to worship.

The leap from predictive AI to agentic AI, and its enterprise challenge

Historically, enterprise AI predicted. Useful, but passive. Agentic AI acts. It collaborates, hands off work, escalates, and adapts, which shifts the failure modes. Inter‑agent coordination becomes a core systems problem, misplanned actions cost more than a bad forecast, and governance can’t be bolted on after the fact—Einstein Trust Layer matters here. At the same time, scale and latency pressures compound as agents multiply, even as Salesforce points to billions of monthly predictions today. Composability becomes the hedge against a new ossified monolith.

Maybe that’s why “embedding” feels like the only path that scales. You need primitives, not plugins.

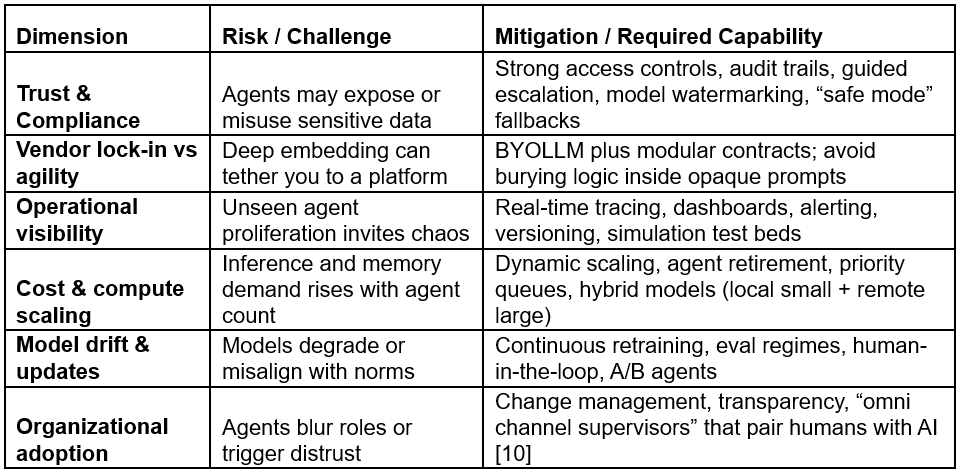

Strategic risks & trade-offs for the enterprise buyer

If you’re evaluating this (especially with a regulated or mission‑critical lens), a few dimensions stand out:

Treating Agentforce 360 like a feature upgrade probably under‑shoots it. It’s closer to an operating shift.

Implications for financial, blockchain, and regulated enterprises

I’ve been anchoring this to higher‑regulation contexts because the constraints force clarity. Some agents may need to run in specific geographies or private networks to satisfy data locality and sovereignty requirements; Salesforce points to options like running Anthropic Claude models inside its trust boundary for these cases. Explainability and auditability become non‑negotiable, with traceable reasoning preferred over opaque chains of prompts.

For blockchain contexts, agents that read chain state, manage wallets, or trigger oracles will require custom connectors. Resilience and adversarial safety move to the forefront to guard against prompt injection and adversarial queries in financial‑grade settings. And regulatory alignment needs to be wired into the design, covering Know Your Customer (KYC), anti-money laundering (AML), and similar policies, rather than added afterward as a filter.

Maybe the headline: agents don’t just “add” to regulated environments—they reshape what adoption even means.

What success looks like—and where early signals already exist

If this trajectory holds, success will look like agents woven into core workflows rather than sitting on the sidelines as optional helpers. Teams should feel a meaningful reduction in repetitive work, with smooth handoffs between agents and humans. You’d expect emergent ecosystems to appear, where marketplace agents and partner modules plug into the mesh.

Above all, operations should be observable and improvable through instrumentation. Early signals point in this direction, from reports of large support‑task reductions driven by agents, to security and compliance agents receiving first‑class treatment, to a Command Center dedicated to monitoring agent health and flows. Vertical adopters such as Propel Software in proptech and PLM suggest how this embeds into product‑data loops, while multivendor partnerships with OpenAI and Anthropic hedge against single‑model bets.

Signals, not guarantees—but the vector seems clear.

What readers in blockchain, fintech, and enterprise should watch

A few markers may tell us where this goes. First, watch for agent interoperability standards, such as the Model Context Protocol (MCP) and Agent2Agent (A2A), that let agents coordinate and offload work reliably, a direction Agentforce 3 already nods to.

Second, look for open agent marketplaces that turn agents into reusable modules instead of bespoke snowflakes. Third, track cross‑chain and oracle integrations that help agents reason across on‑chain and off‑chain state. Fourth, expect more composable LLM governance layers that enforce policy, audit, and safe fallbacks. Finally, watch for benchmarks that evaluate enterprise agent tasks beyond toy scenarios, including efforts like SCUBA aimed at CRM fidelity.
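As a rough picture of what a composable governance layer could look like, here is a minimal Python sketch that wraps an agent action with a policy check, an audit record, and a safe fallback. The names (`Governor`, `Action`, `transfer_limit`) and the 10,000 threshold are hypothetical choices of mine, not a real regulatory limit or any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

FALLBACK = "escalated_to_human"


@dataclass
class Action:
    agent: str
    name: str
    amount: float = 0.0


def transfer_limit(action: Action) -> Optional[str]:
    """Hypothetical policy: large transfers need human review."""
    if action.name == "transfer" and action.amount > 10_000:
        return "transfer exceeds auto-approval limit"
    return None


@dataclass
class Governor:
    """Wraps agent actions with policy checks, an audit trail, and a fallback."""
    policies: List[Callable[[Action], Optional[str]]]
    audit_log: List[dict] = field(default_factory=list)

    def execute(self, action: Action, handler: Callable[[Action], str]) -> str:
        # Collect every policy violation before deciding what to do.
        violations = [v for v in (policy(action) for policy in self.policies) if v]
        if violations:
            outcome = FALLBACK
        else:
            try:
                outcome = handler(action)
            except Exception as exc:
                # A failing handler also falls back instead of crashing the flow.
                violations = [f"handler failed: {exc}"]
                outcome = FALLBACK
        # Every attempt is recorded, whether it ran, was blocked, or failed.
        self.audit_log.append(
            {"agent": action.agent, "action": action.name,
             "violations": violations, "outcome": outcome}
        )
        return outcome


governor = Governor(policies=[transfer_limit])
print(governor.execute(Action("payments-agent", "transfer", 250.0), lambda a: "done"))
print(governor.execute(Action("payments-agent", "transfer", 50_000.0), lambda a: "done"))
print(len(governor.audit_log))
```

Because policies are plain functions in a list, adding a new rule does not touch the agents themselves, which is roughly what "composable" would mean in practice.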

If these mature, the foundation gets sturdier.

Closing insight

Maybe the simplest way to hold this: Agentforce 360 isn’t just Salesforce’s gen‑AI moment—it’s a bet that agents become the substrate of enterprise logic. The near‑term question isn’t “adopt or not,” it’s “how quickly can we wire the guardrails so the system can learn without breaking us?”

I’m still processing, but the pattern feels familiar: standardize the primitives so differentiation can compound on top. You might already see the first agents gathering around your stack. It’s worth paying attention to.

For artificial intelligence (AI) to work within the law and thrive in the face of growing challenges, it needs to integrate an enterprise blockchain system that ensures data input quality and ownership, allowing it to keep data safe while also guaranteeing the immutability of data. Check out CoinGeek’s coverage of this emerging tech to learn more about why enterprise blockchain will be the backbone of AI.


02-18-2026