Major artificial intelligence (AI) foundation model developers need to be more transparent about how they train their models and about those models' impact on society, says a new report from Stanford University.

In the report, published through its Institute for Human-Centered Artificial Intelligence (HAI), the California-based university says that prominent AI companies are becoming less transparent as their models become more powerful.

“It is clear over the last three years that transparency is on the decline while capability is going through the roof. We view this as highly problematic because we’ve seen in other areas like social media that when transparency goes down, bad things can happen as a result,” said Stanford professor Percy Liang.

Foundation model developers are becoming less transparent, making it difficult for researchers, policymakers, and the public to understand how these models work, as well as their limitations and impact. Scholars assess the transparency of 10 developers. https://t.co/AkxI27Jhm0 pic.twitter.com/h09p8CZD3b

— Stanford HAI (@StanfordHAI) October 18, 2023

While regulators, researchers, and users continue to demand transparency, AI model developers have stood firm against these demands. When it launched GPT-4 in March, OpenAI said it would remain tight-lipped about how it works.

“Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar,” the company said in its GPT-4 technical report.

The Stanford team says that the opacity of prominent AI foundation models leaves consumers unaware of the models' limitations and leaves regulators unable to formulate meaningful policies for the sector.

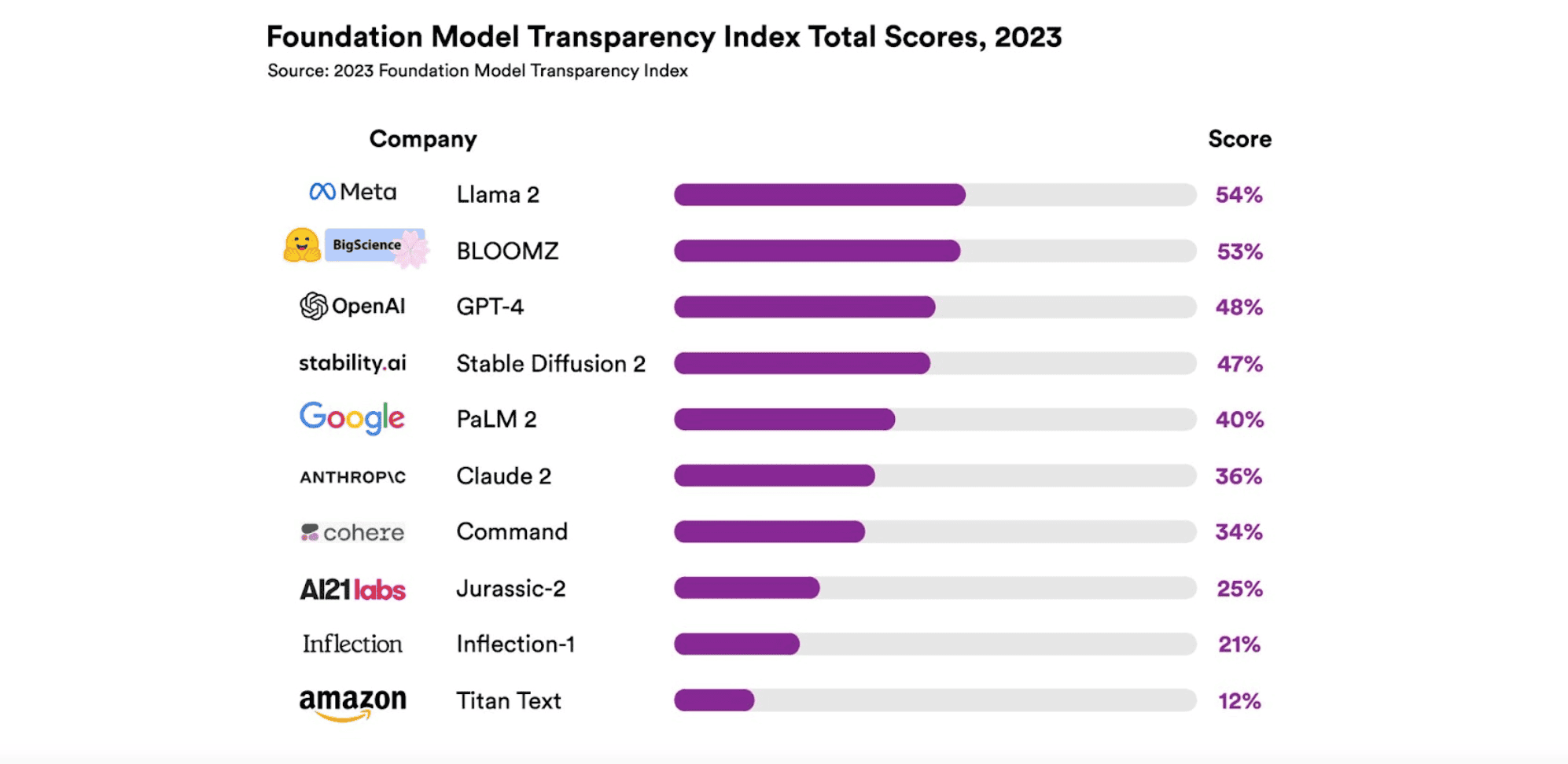

The researchers, in partnership with experts from the Massachusetts Institute of Technology (MIT) and Princeton University, also announced the launch of the Foundation Model Transparency Index. The index evaluates 100 aspects of AI model transparency and rates the most prominent players in the field.

None of the developers scored well. Meta’s (NASDAQ: META) LLaMA ranked first at 54%, with high ratings in access, distribution, and methods. OpenAI’s GPT-4, which powers the paid version of its ChatGPT chatbot, ranked third at 48%. Amazon’s (NASDAQ: AMZN) lesser-known Titan Text ranked lowest at 12%.

“What we’re trying to achieve with the index is to make models more transparent and disaggregate that very amorphous concept into more concrete matters that can be measured,” commented Rishi Bommasani, one of the Stanford researchers behind the report.

For artificial intelligence (AI) to operate within the law and thrive in the face of growing challenges, it needs to integrate an enterprise blockchain system that ensures data input quality and ownership, allowing it to keep data safe while also guaranteeing the immutability of data. Check out CoinGeek’s coverage of this emerging tech to learn more about why enterprise blockchain will be the backbone of AI.

Watch: AI truly is not generative, it’s synthetic

02-15-2026