Shortly after New Hampshire was hit with one of the first widespread artificial intelligence (AI)-generated disinformation schemes tied to the upcoming presidential election, Meta (NASDAQ: META) announced that it will step up its efforts to combat AI-generated misinformation campaigns.

In a recent blog post, Meta said it is working to ensure AI-generated content is easily identifiable across its platforms (Facebook, Instagram, and Threads).

Meta has begun implementing safeguards around AI-generated content on its platforms, labeling images created with its own generative AI software with an “Imagined with AI” watermark.

However, the company says its goal is to extend this transparency to content generated by other companies’ tools, such as those created by Google (NASDAQ: GOOGL), OpenAI, Microsoft (NASDAQ: MSFT), Adobe (NASDAQ: ADBE), Midjourney, and Shutterstock (NASDAQ: SSTK). To make that possible, Meta will work with industry partners to create common technical standards for identifying AI content, including AI-generated video and audio.

Meta’s strategies for identifying AI-generated content

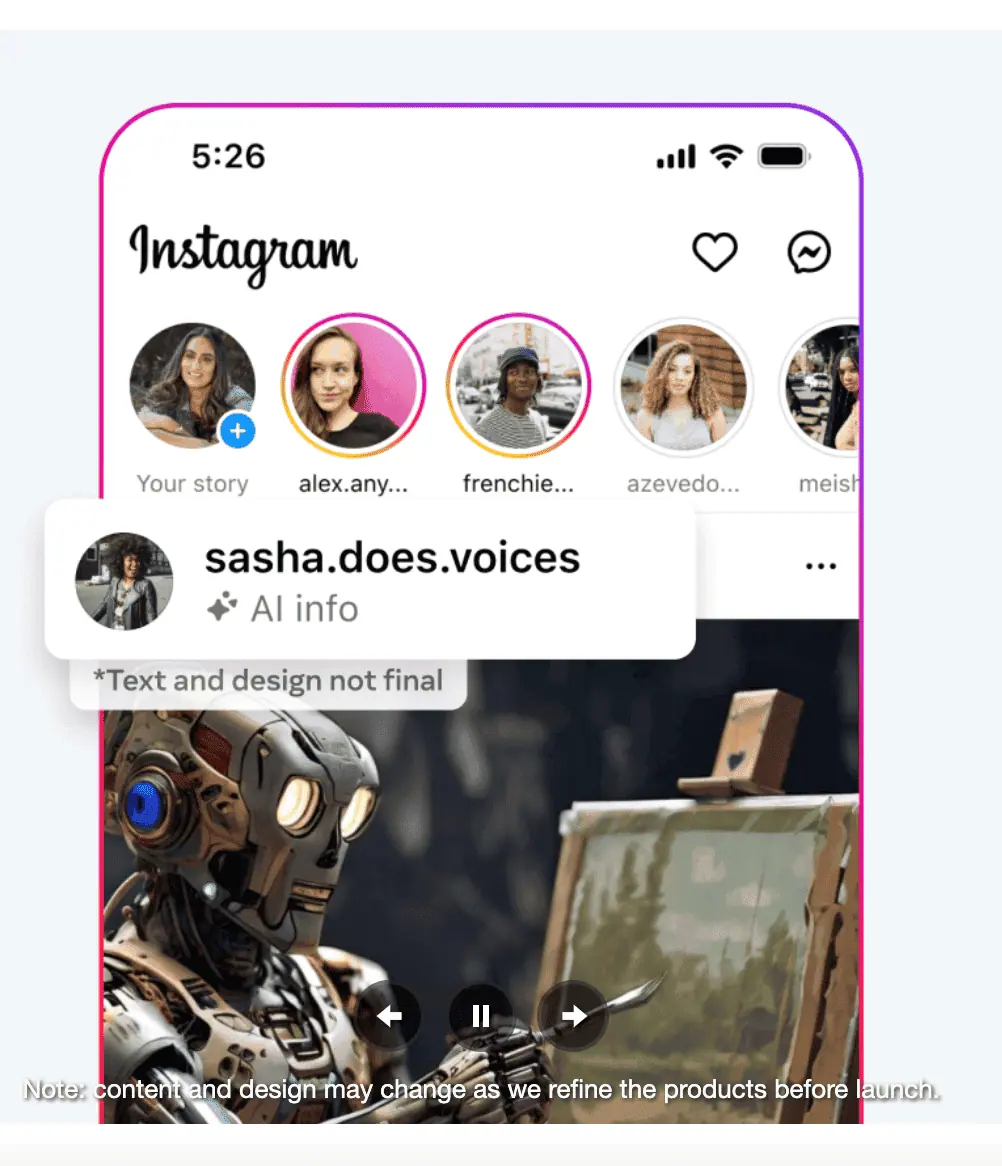

To help the world easily identify AI-generated content, Meta is implementing visible markers on images, invisible watermarks, and metadata embedded within files to signal AI was used to create the content.

Although tools for identifying AI-generated images are now common enough that Meta can detect images created by other generative AI providers, the company acknowledges that comparable identifiers are not yet as widespread for AI-generated audio and video.

While Meta does not currently have a blanket solution for audio and video content created by other service providers, individuals who share AI-generated content created by one of Meta’s tools on platforms it owns will be required to add a disclosure to their post indicating that the content was AI-generated. Meta says users who circumvent this requirement will face penalties for non-compliance.

The fight against digital disinformation

Some reports say that a recent manipulated video of President Joe Biden that went viral on Facebook prompted the board overseeing content moderation to call for changes to Facebook’s policy. Although the video was not legitimate, it did not violate Meta’s policy on manipulated media because it was not created using artificial intelligence, so it was allowed to remain on Facebook.

Meta’s current policy restricts only content that shows individuals saying words they did not say, and only if it was created using AI or machine learning techniques. However, the oversight board recommends a broader approach, suggesting that Meta’s policy should cover all audio and visual content that portrays people doing or saying things they never did, regardless of the creation method.

A few AI-generated deepfakes have already gone viral on social media, so the companies that create these generative AI systems must respond quickly and, even more importantly, be proactive where possible.

With deepfake technology rapidly evolving, the ability to create highly realistic, nearly indistinguishable fake content poses a significant threat to many people, states, and governments, especially concerning high-profile individuals such as politicians. In the fiercely competitive political arena, the risk of becoming the victim of a deepfake attack is high, with adversaries seeking to undermine each other’s credibility and influence voter perceptions.

We saw such an attack unfold at the end of January with a robocall scam that used AI to mimic the voice of President Joe Biden, discouraging voters from participating in the New Hampshire primary election. In this instance, the robocall was eventually identified as illegitimate, but the event is most likely just a sample of the many AI-generated electioneering tactics we will see this year.

Industry-wide AI standards

As the technology behind AI-generated images, videos, audio, and text continues to evolve, every generative AI provider will need to take steps to ensure that AI-generated content is easily identifiable. This becomes increasingly important during an election year, a period of heightened risk of disinformation, misinformation, scams, and fraud aimed at influencing election outcomes.

As AI systems continue to improve in their ability to produce content that closely mimics reality, the collective effort of the tech industry to develop and adhere to standards for identifying AI-generated content becomes crucial.

In the coming months, we will likely see common technical standards continue to emerge as service providers, including the tech giants, unite to mitigate the risk and damage that AI can cause. Although most of the tools they’ve created live within a walled garden, their outputs can be shared across any social media platform or other medium.

It will take a collaborative approach, and possibly a few more instances of deepfakes going viral and causing harm, for more players and legislators to jump into action and update policies in a way that protects individuals from these new AI attack vectors.

For artificial intelligence (AI) to work within the law and thrive in the face of growing challenges, it needs to integrate an enterprise blockchain system that ensures data input quality and ownership, allowing it to keep data safe while also guaranteeing the immutability of data. Check out CoinGeek’s coverage on this emerging tech to learn more about why enterprise blockchain will be the backbone of AI.


07-02-2025
