
The U.S. government is set to introduce its first regulations for artificial intelligence (AI) development, with the Biden administration releasing an executive order this week. The new rules will require AI system developers to report their progress to the federal government and will set standards for testing before public release, while aiming to keep the U.S. at the technology’s forefront.

The order mentions the words “safe,” “secure,” and “trustworthy” multiple times, but not “transparency” or “oversight.” It’s unclear what kind of access the public will have to information on how large corporations build AI systems or the datasets used to train them. Given the impact AI will inevitably have on society, there’s a strong argument for this training data to be recorded and timestamped on a public blockchain to give those outside the government a clearer understanding of how AIs will “behave.”

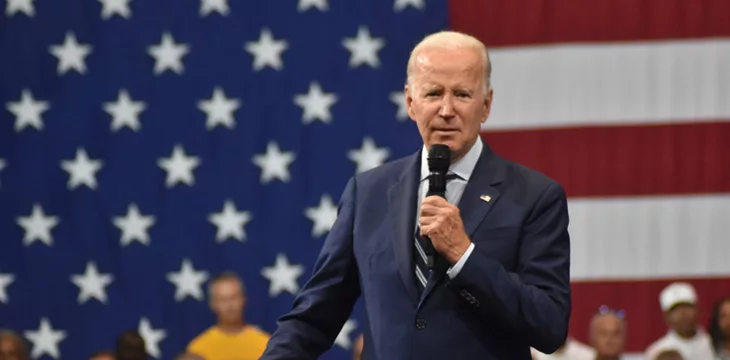

“To realize the promise of AI and avoid the risk, we need to govern this technology. In the wrong hands, AI can make it easier for hackers to exploit vulnerabilities in the software that makes our society run,” President Joe Biden said.

AI executive order the first of its kind in the United States

The Biden administration said the order contains “the most sweeping actions ever taken to protect Americans from the potential risks of AI systems.” In particular, it addresses AI-related risks to national security and health, individual privacy and safety, and the possibility of fraud and deception in AI content.

It also directs the National Security Council and White House Chief of Staff to draw up a National Security Memorandum to execute further actions on AI and security—to “ensure that the United States military and intelligence community use AI safely, ethically, and effectively in their missions,” as well as counter any adversarial military use of AI in other countries.

The order addresses two kinds of risks: those presented by domestic AI development and those from AIs built outside the U.S. that may be weaponized to attack U.S. systems, endanger public health, or defraud or deceive the public. The Biden administration said it hopes to build relationships with teams in ideologically aligned foreign countries to promote similar sets of standards (or, in other words, keep a close eye on what they’re doing).

Like many other regulations in recent times, it also seeks to promote “equity” and protect the civil rights of vulnerable minorities—warning landlords, the criminal justice system, employers, and federal contractors not to use AI profiling algorithms that may result in discrimination.

On the more positive side, the new rules will seek to promote AI benefits in health care, workplace training, and education, e.g., by developing affordable and life-saving drugs and assisting educators with AI-based personal tutoring.

To promote innovation and attempt to maintain a leading role for the U.S., a pilot National AI Research Resource will provide students and researchers greater access to AI resources and introduce a grants program. Small developers and entrepreneurs will have more access to technical assistance and resources.

Regulate, pause, or monitor?

Though the concept of “artificial intelligence” has been around for decades in the computer science world and extrapolated in science fiction, it’s only recently that the public has begun to experience impressive, and sometimes alarming, examples of its abilities. The nature of the technology means these results feed into its own exponential growth, and there have been calls for varying degrees of regulation, from more oversight to outright bans.

In March 2023, an organization called the Future of Life Institute issued an open letter recommending a six-month pause on AI development for systems more advanced than OpenAI’s GPT-4 (note: GPT-4 was just added to the public version of OpenAI’s popular ChatGPT this week). Asking “Should we?” it said that as AI systems develop abilities that are “human-competitive at general tasks,” the risk emerges that even advanced developers could create systems they can’t understand, leading ultimately to humanity’s obsolescence.

The institute’s letter was signed by over 1,000 experts in the technology and artificial intelligence research community, most notably Elon Musk.

AI dataset auditability is one solution

Given these concerns and the vast list of “known and unknown unknowns” associated with AI progress, the public will most likely welcome some kind of governmental regulation.

However, there is no worldwide body with the ability to regulate AI development in every country or enforce limits everywhere. As has happened with biological research, any research considered “edgy” or “dangerous” is bound to move to jurisdictions beyond the reach of local rules.

A better (though by no means failsafe) solution would be to maintain training datasets on a trusted global ledger where anyone can audit them and keep track of who’s using them. With blockchain microtransactions and tokenized data, it’d be far easier to manage the human input that ultimately defines what machines learn. Once again, the blockchain used for this purpose must be fast, scalable, and open—as well as trusted as a “universal source of truth” worldwide.
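As a rough illustration of the idea, the sketch below records a fingerprint of a training dataset, along with who used it and when, in an append-only log where each entry commits to the one before it. This is a minimal sketch under stated assumptions: a plain in-memory Python list stands in for a public blockchain, and the names `fingerprint`, `append_entry`, and `verify` are hypothetical, not part of any real blockchain or AI toolkit. It shows only the auditing principle, not how any particular ledger works.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def _hash_body(body: dict) -> str:
    """Hash an entry's fields in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(ledger: list, dataset: str, data: bytes, user: str,
                 timestamp: Optional[float] = None) -> dict:
    """Append a timestamped record of who used which dataset.

    Hypothetical helper: the list `ledger` stands in for a public chain.
    """
    body = {
        "dataset": dataset,
        "data_hash": fingerprint(data),
        "user": user,
        "timestamp": time.time() if timestamp is None else timestamp,
        "prev_hash": ledger[-1]["entry_hash"] if ledger else GENESIS,
    }
    entry = {**body, "entry_hash": _hash_body(body)}
    ledger.append(entry)
    return entry


def verify(ledger: list) -> bool:
    """Check that no entry was altered and that the chain is unbroken."""
    prev = GENESIS
    for entry in ledger:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev or _hash_body(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True
```

An auditor holding a copy of the dataset could recompute `fingerprint` and compare it with the recorded `data_hash`, while `verify` would flag any entry that was edited after the fact. A real deployment would also need signatures, consensus, and a way to store or reference the data itself, none of which is modeled here.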

AI researcher and lecturer Konstantinos Sgantzos has advocated for training data to be kept in such a ledger where it can be parsed by any AI, anywhere. In a recent Twitter/X Spaces session with fellow researcher Ian Grigg, Sgantzos said the ability to audit these datasets is more important than the code forming neural networks and machine-learning systems themselves.

This is going to be an interesting conversation! https://t.co/3G6hWgbS7b

— Konstantinos Sgantzos (@CostaSga) October 28, 2023

“What happens to the dataset, though? We don’t know what the dataset looks like. We don’t know how the model is producing the outputs,” he said. “This is a black box. And what happens to the proprietary datasets, like the OpenAI one, or similar companies, or isolated companies using datasets where we don’t know what they contain?”

Sgantzos added that he personally dislikes the term “artificial intelligence” since intelligence itself is a concept that no one truly understands. Keeping the well-known acronym, he prefers “analysis of information” to distinguish between the varying levels of AI algorithms in consumer products like Alexa and YouTube and those with more sophisticated capabilities.

For artificial intelligence (AI) to operate within the law and thrive in the face of growing challenges, it needs to integrate an enterprise blockchain system that ensures data input quality and ownership, keeping data safe while also guaranteeing its immutability. Check out CoinGeek’s coverage on this emerging tech to learn more about why enterprise blockchain will be the backbone of AI.


07-13-2025