
A group of researchers has reported success with a new specialized classifier that identifies AI-generated academic papers with high accuracy.

The machine learning tool, developed by a team of researchers from multiple universities, stands apart from other classifiers by relying on writing style rather than predictive text patterns. According to a report by Nature, the research team trained the detection tool on over 100 published introductions from 10 leading chemistry journals, chosen to represent human-written text.
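To give a rough sense of what "writing style" can mean as classifier input, the sketch below computes a handful of simple stylistic statistics for a passage. The function name `style_features` and the specific features are assumptions chosen for illustration. The article does not list the features the researchers actually used, and this is not their method.

```python
import re
import statistics


def style_features(text: str) -> dict[str, float]:
    """Compute a few simple writing-style statistics for a passage.

    Illustrative only: the feature set here is an assumption, not the
    one used by the detector described in the article.
    """
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words_per_sentence = [len(s.split()) for s in sentences]
    n_words = sum(words_per_sentence)

    return {
        "sentence_count": float(len(sentences)),
        # Average number of words per sentence.
        "mean_sentence_length": (
            statistics.fmean(words_per_sentence) if sentences else 0.0
        ),
        # How much sentence length varies across the passage.
        "sentence_length_stdev": (
            statistics.pstdev(words_per_sentence) if sentences else 0.0
        ),
        # Punctuation habits, normalized or counted directly.
        "commas_per_word": text.count(",") / n_words if n_words else 0.0,
        "parentheses": float(text.count("(")),
        "question_marks": float(text.count("?")),
    }


if __name__ == "__main__":
    sample = "Short one. This sentence is a bit longer, really (honestly)? Yes."
    for name, value in style_features(sample).items():
        print(f"{name}: {value:.3f}")
```

Numeric vectors like these, computed for passages known to be human-written and passages known to be AI-generated, could then be fed to any standard classifier. Features of this kind describe how a passage is written rather than how predictable each next word is.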

Introductions were an easy choice because AI tools can readily generate them once they have access to the background literature, according to Heather Desaire, one of the project’s researchers.

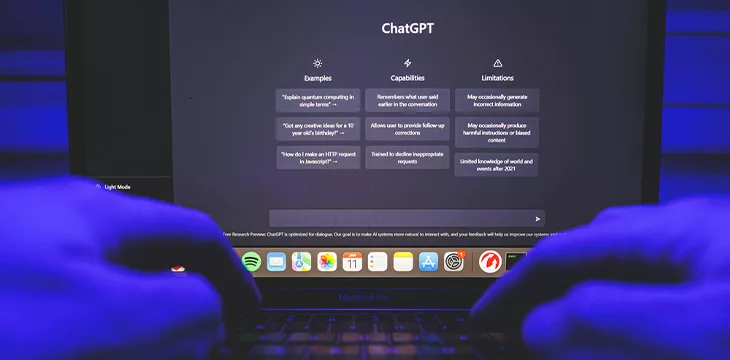

When deployed to identify AI-generated text, the tool achieved 100% accuracy in spotting text generated by OpenAI’s ChatGPT-3.5, the researchers noted. It recorded the same success rate on text generated by ChatGPT-4, outperforming other classifiers in the ecosystem.

“By contrast, the AI detector ZeroGPT identified AI-written introductions with an accuracy of only about 35-65%, depending on the version of ChatGPT used and whether the introduction had been generated from the title or the abstract of the paper,” read the report.

The researchers claimed that one classifier released by OpenAI recorded only 10-55% accuracy in identifying AI-generated text in research papers, citing its reliance on predictive text patterns as a likely reason for the shortfall. Despite its focus on chemistry papers, the new AI detector recorded unprecedented accuracy in identifying AI-generated papers in other academic fields.

“Most of the field of text analysis wants a really general detector that will work on anything,” said Desaire. “We were really going after accuracy.”

However, the tool falls short at identifying AI-generated text outside academia, recording lackluster results when faced with news articles. The researchers attributed this weakness to the classifier’s specialized training on scientific journal papers.

Easy to create, difficult to spot

As AI developers race to build more advanced generative models, distinguishing human-generated content from AI-generated content has become an arduous task. OpenAI was forced to shut down its own AI detection tool over concerns about low accuracy, and the firm has detailed plans for a new classifier.

“We are working to incorporate feedback and are currently researching more effective provenance techniques for text, and have made a commitment to develop and deploy mechanisms that enable users to understand if audio or visual content is AI-generated,” said OpenAI in a statement.

Experts have mooted the possibility of using blockchain technology as a means to identify AI-generated content, while other tech firms are exploring the possibilities of an “invisible watermark” as a means to label AI content.


07-05-2025
